Applications of Neural Networks

Nathanael O'Donnell

Neural Networks - An Introduction

In comparison with most other machine learning techniques, including linear regression and logistic regression, neural networks are significantly more complex. The simplest way of explaining neural networks is as multi-dimensional logistic regression, in which the "layers" of the neural network represent additional transformations of the input. Somewhat intuitively (because they are based on logistic regression), neural networks in their more basic forms are classification models. However, by replacing the final sigmoid with a linear output, the same kind of network can serve as a regression model as well.
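To make the "multi-dimensional logistic regression" picture concrete, here is a minimal pure-Python sketch. The function names (`sigmoid`, `logistic_regression`, `layer`, `network`) are hypothetical, the weights are random rather than trained, and the layer sizes are arbitrary. A single neuron is exactly a logistic regression, a layer is several of them reading the same input, and the only difference between the classification and regression versions is whether the final output is passed through the sigmoid.

```python
import math
import random

def sigmoid(z):
    # Logistic function: squashes any real number into (0, 1).
    return 1.0 / (1.0 + math.exp(-z))

def logistic_regression(x, weights, bias):
    # A single neuron = one logistic-regression unit: weighted sum, then sigmoid.
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return sigmoid(z)

def layer(x, weight_rows, biases):
    # A layer is several logistic-regression units applied to the same input.
    return [logistic_regression(x, row, b) for row, b in zip(weight_rows, biases)]

def network(x, hidden_w, hidden_b, out_w, out_b, task="classification"):
    # The hidden layer is an extra transformation of the raw inputs.
    h = layer(x, hidden_w, hidden_b)
    z = sum(w * hi for w, hi in zip(out_w, h)) + out_b
    # Classification: squash the output into a probability.
    # Regression: leave the output unbounded (a linear output unit).
    return sigmoid(z) if task == "classification" else z

random.seed(0)
n_inputs, n_hidden = 3, 4  # arbitrary, illustrative sizes
hidden_w = [[random.uniform(-1, 1) for _ in range(n_inputs)] for _ in range(n_hidden)]
hidden_b = [random.uniform(-1, 1) for _ in range(n_hidden)]
out_w = [random.uniform(-1, 1) for _ in range(n_hidden)]
out_b = 0.0

x = [0.5, -1.2, 3.0]
p = network(x, hidden_w, hidden_b, out_w, out_b, task="classification")
y = network(x, hidden_w, hidden_b, out_w, out_b, task="regression")
print(p, y)
```

Because `p` is simply `sigmoid(y)` here, the two modes share every weight; in practice they would be trained with different loss functions (for example, cross-entropy for classification and squared error for regression).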

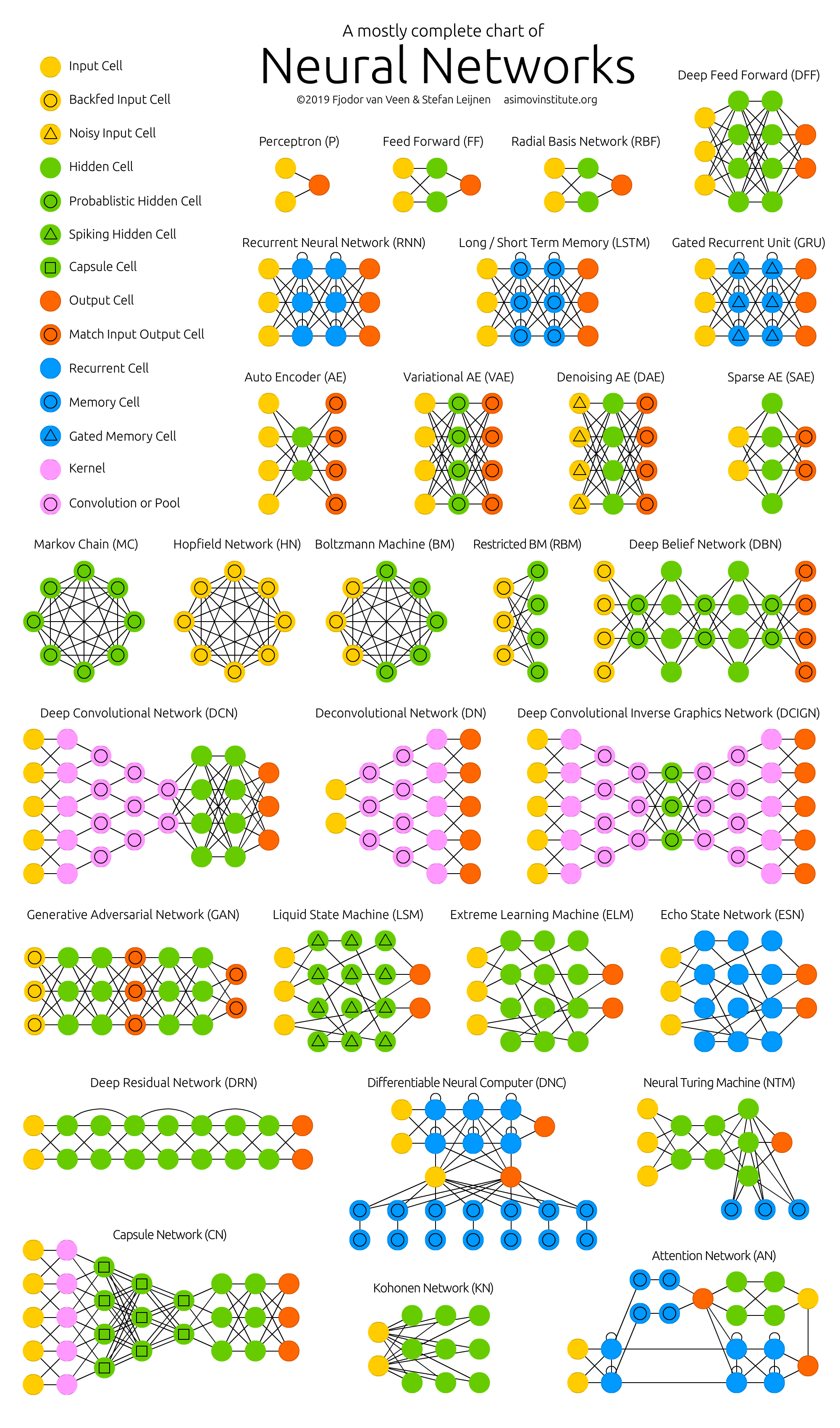

So if neural networks are so much more complicated than other machine learning methods, then why do practitioners use them? The simple answer is that they work better than other approaches in many situations. The complexity of neural networks is what makes them such a flexible and powerful approach for more complex problems. Neurons can be added or removed, as can layers, giving rise to a virtually unlimited variety of "architectures" (pictured below) that tackle specific problems or categories of problems.
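One way to see how architectures vary is to describe a simple fully connected network by nothing more than a list of layer sizes. The sketch below assumes sigmoid activations in every layer and random, untrained weights; `init_network`, `count_parameters`, and `forward` are illustrative names, not part of any library. Adding a neuron or a layer is just a change to the list.

```python
import math
import random

def init_network(layer_sizes, seed=0):
    # layer_sizes lists neurons per layer, input layer first,
    # e.g. [4, 8, 3] = 4 inputs, one hidden layer of 8 neurons, 3 outputs.
    rng = random.Random(seed)
    params = []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
        biases = [0.0] * n_out
        params.append((weights, biases))
    return params

def count_parameters(params):
    # Total number of weights and biases: a rough measure of model size.
    return sum(len(w) * len(w[0]) + len(b) for w, b in params)

def forward(params, x):
    # Pass the input through each layer in turn, using a sigmoid activation.
    for weights, biases in params:
        x = [1.0 / (1.0 + math.exp(-(sum(w * xi for w, xi in zip(row, x)) + b)))
             for row, b in zip(weights, biases)]
    return x

shallow = init_network([4, 8, 3])
deep = init_network([4, 8, 8, 8, 3])
for name, net in [("shallow", shallow), ("deep", deep)]:
    print(name, "hidden layers:", len(net) - 1, "parameters:", count_parameters(net))
# shallow hidden layers: 1 parameters: 67
# deep hidden layers: 3 parameters: 211
print(forward(deep, [1.0, 0.5, -0.5, 2.0]))
```

Even in this tiny example, adding two hidden layers roughly triples the number of parameters, which is part of why larger architectures need more data and computation to train.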

Source: The Asimov Institute

Applications of Neural Networks

The applications of neural networks are practically infinite; at present, neural networks have been used to solve problems as diverse as predicting stock prices and beating human players at popular video games. Below, I will discuss several specific examples of problems that neural networks have been used to solve:

Example 1: Handwriting Recognition

If you've ever used a product like Google Lens to convert a photo of handwriting into text, then you've made use of a neural network for handwriting recognition. Each layer of the neural network refines the model's "guess" of what each handwritten character is, and the final layer of the network consists of 36 neurons: one for each of the 26 letters and one for each digit from 0 to 9. A simplified visualization of the network (classifying numeral digits only) might look like this:

Source: Neural Networks and Deep Learning, Chapter 1
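The sketch below shows the shape of such a classifier, assuming 28×28 grayscale input images (784 pixels), a single hidden layer of 30 neurons, and a 36-neuron output layer followed by a softmax. Everything here is illustrative: the helper names are hypothetical, and because the weights are random, the predicted character is meaningless until the network is trained on labelled handwriting samples.

```python
import math
import random
import string

# Output classes: 10 digits followed by 26 letters (case-insensitive) = 36 neurons.
CLASSES = string.digits + string.ascii_uppercase

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def softmax(zs):
    # Turn raw output scores into probabilities that sum to 1
    # (subtracting the max first keeps math.exp from overflowing).
    m = max(zs)
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]

def dense(x, weights, biases):
    # Fully connected layer: one weighted sum per output neuron.
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]

def make_classifier(n_pixels=28 * 28, n_hidden=30, n_classes=len(CLASSES), seed=0):
    rng = random.Random(seed)
    def rand_matrix(rows, cols):
        return [[rng.gauss(0, 0.1) for _ in range(cols)] for _ in range(rows)]
    return {
        "w1": rand_matrix(n_hidden, n_pixels), "b1": [0.0] * n_hidden,
        "w2": rand_matrix(n_classes, n_hidden), "b2": [0.0] * n_classes,
    }

def classify(model, pixels):
    # Input pixels -> hidden layer -> 36 output scores -> most likely character.
    hidden = [sigmoid(z) for z in dense(pixels, model["w1"], model["b1"])]
    probs = softmax(dense(hidden, model["w2"], model["b2"]))
    best = max(range(len(probs)), key=probs.__getitem__)
    return CLASSES[best], probs

model = make_classifier()
image = [random.random() for _ in range(28 * 28)]  # stand-in for a real scanned character
label, probs = classify(model, image)
print(label, max(probs))
```

Restricting `CLASSES` to `string.digits` (10 outputs) gives the digits-only version of the network shown in the figure.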

Example 2: AlphaGo and AlphaZero

A very famous example of the power of neural networks is AlphaGo, the Go-playing model developed by Google's DeepMind group. AlphaGo is actually composed of two neural networks: one that selects the next move, and another that predicts the winner of the game. The networks were trained first by "watching" thousands of amateur games, and then by playing games against themselves. In 2016, AlphaGo defeated Lee Sedol, one of the world's strongest Go players, and in 2017 a more general model, AlphaZero, was developed to master several board games, including chess, shogi, and Go. Although AlphaGo and AlphaZero are not the most practical applications of neural networks, they are striking examples of how powerful neural networks can be.

A human player against AlphaGo - Source: DeepMind
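The division of labour between AlphaGo's two networks can be sketched in a few lines. This is a toy illustration only, not DeepMind's implementation: it assumes a hypothetical 3×3 board, single-layer networks with random weights, and a board encoding of +1, -1, and 0 for the player to move, the opponent, and empty points. The real AlphaGo used deep convolutional networks on a 19×19 board and combined them with a tree search over possible continuations.

```python
import math
import random

BOARD_POINTS = 9  # hypothetical 3x3 toy board; a real Go board has 19x19 = 361 points

def init_weights(n_out, n_in, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]

def policy_network(board, weights):
    # "Which move next?": one score per board point, turned into probabilities.
    scores = [sum(w * s for w, s in zip(row, board)) for row in weights]
    # Occupied points are illegal moves, so they are left out entirely.
    legal = [i for i, s in enumerate(board) if s == 0]
    m = max(scores[i] for i in legal)
    exps = {i: math.exp(scores[i] - m) for i in legal}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}

def value_network(board, weights):
    # "Who will win?": a single number in (-1, 1); positive favours the player to move.
    z = sum(w * s for w, s in zip(weights[0], board))
    return math.tanh(z)

rng = random.Random(42)
policy_w = init_weights(BOARD_POINTS, BOARD_POINTS, rng)
value_w = init_weights(1, BOARD_POINTS, rng)

# +1 = player to move, -1 = opponent, 0 = empty
board = [1, 0, -1,
         0, 1, 0,
         -1, 0, 0]
move_probs = policy_network(board, policy_w)
best_move = max(move_probs, key=move_probs.get)
print("suggested move:", best_move, "estimated outcome:", value_network(board, value_w))
```

Keeping the two questions ("where should I play?" and "am I winning?") in separate networks lets each be trained with its own target: the policy on moves actually played, the value on game outcomes.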

Example 3: Self-Driving Cars

There is a great deal of controversy surrounding the development of self-driving cars, but there is far less debate about the core machine learning approach behind the algorithms that power them: deep neural networks. The "deep" in "deep learning" simply refers to the fact that these neural networks have many "hidden layers" between the input and output layers of the network. Generally speaking, adding more layers enables the network to tackle problems of greater complexity, and a self-driving car is about as complex as a machine learning problem can get, at least as of 2020.

To date, the most successful applications of neural networks for self-driving cars have been developed by Alphabet's Waymo and by Tesla. Waymo's model is perhaps the most technically sophisticated, with millions of self-driven miles logged on U.S. public roads.
